Configure rate limiting on a Node.js REST API with Express

Photo by Chris Leipelt / Unsplash

Many SaaS applications today can be integrated into other applications. They do this by exposing a public API that developers use to interact with the system. Because the API is public, developers can send as many requests as they want, and your system can be overloaded for the wrong reasons. This is why you need rate limiting.

Why do you need rate limiting?

- Usage-based pricing: You can allow a number of calls to your public API based on the client's pricing plan. Once a client reaches the maximum number of calls, subsequent calls are throttled.

- DDoS: Your API can be overloaded by thousands of calls from bots across the globe.

- Unexpected costs for a serverless public API: since the pricing model is pay-as-you-go, the more requests you receive, the higher your bill.

- Bad API consumers: even well-intentioned developers can integrate your API poorly into their applications, e.g., a bug that causes an infinite loop of API calls.

Prerequisites

You need these tools installed on your computer to follow this tutorial.

- Node.js 12+

- npm or Yarn (I will use Yarn)

- Docker

We will configure rate limiting on a project that uses MongoDB to store data, so we need Docker to run a MongoDB container.

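Run the command below to start a Docker container from the MongoDB image available on Docker Hub. The -p 27017:27017 flag publishes MongoDB's default port so that the application running on your machine can reach the database.

```bash
docker run -d -p 27017:27017 -e MONGO_INITDB_ROOT_USERNAME=user -e MONGO_INITDB_ROOT_PASSWORD=secret --name mongodb mongo:5.0
```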

Set up the project

As an example project, we will use the Node.js REST API we built in two previous articles.

Let's clone the project and run it locally:

```bash
git clone https://github.com/tericcabrel/blog-tutorials
cd blog-tutorials/node-rest-api-swagger
yarn install
cp .env.example .env
yarn start
```

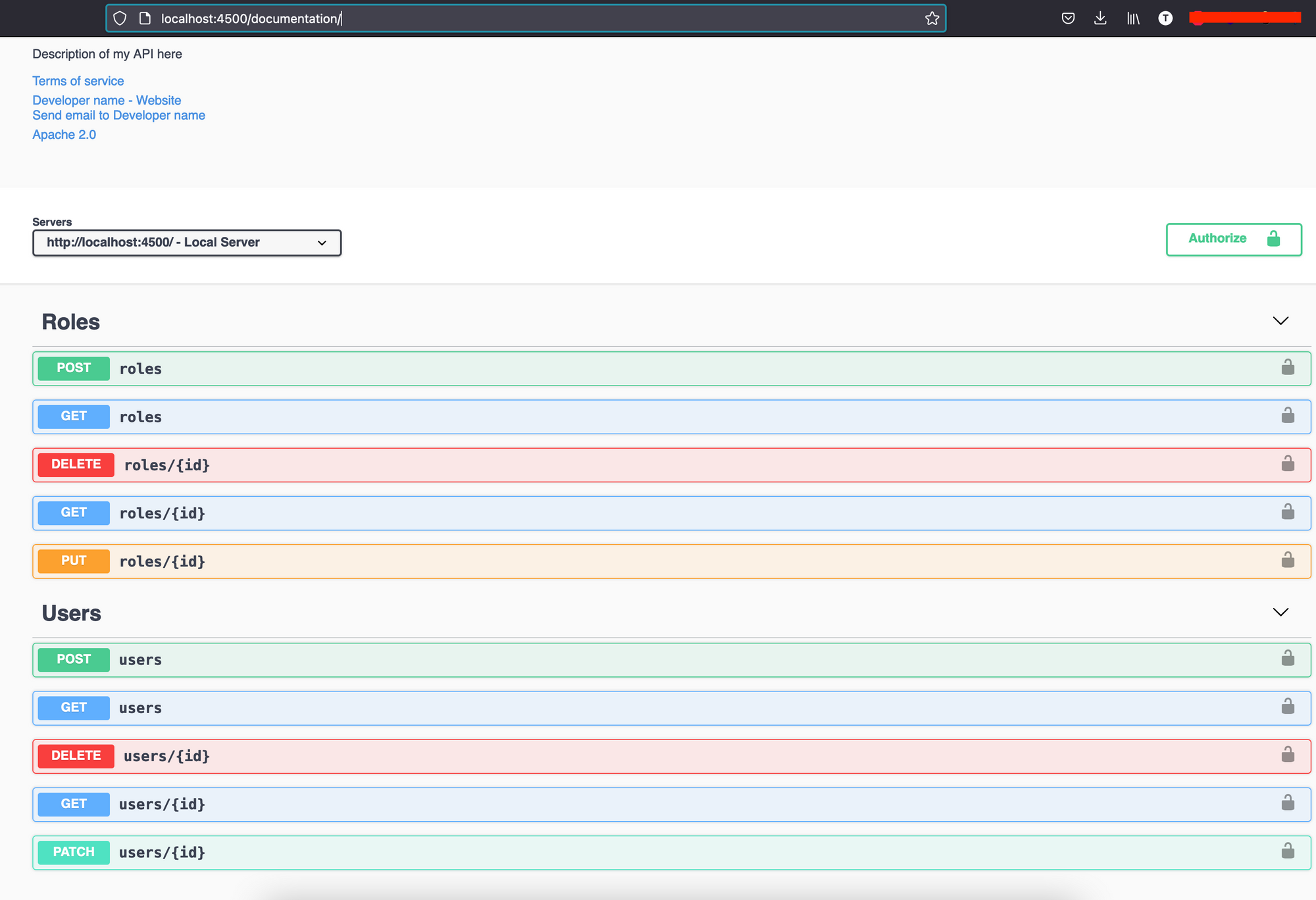

Open your browser and navigate to http://localhost:4500/documentation. You will see a page with all available endpoints.

To visualize the problem with an API that is not rate-limited, let's create a bot that sends requests to our backend indefinitely. Create a file src/bot.ts and add the code below:

```typescript
import axios, { AxiosError } from 'axios';

(async () => {
  let requestCount = 0;

  // Send requests one after another, forever
  while (true) {
    try {
      const response = await axios.get('http://localhost:4500/users');

      console.log('Request ', ++requestCount, ' => Success => Status code: ', response.status);
    } catch (e: unknown) {
      // Failed HTTP calls (e.g., 429 Too Many Requests) reject with an AxiosError
      if (e instanceof AxiosError) {
        console.log('Request ', ++requestCount, ' => Failed => Status code: ', e.response?.status);
      }
    }
  }
})();
```

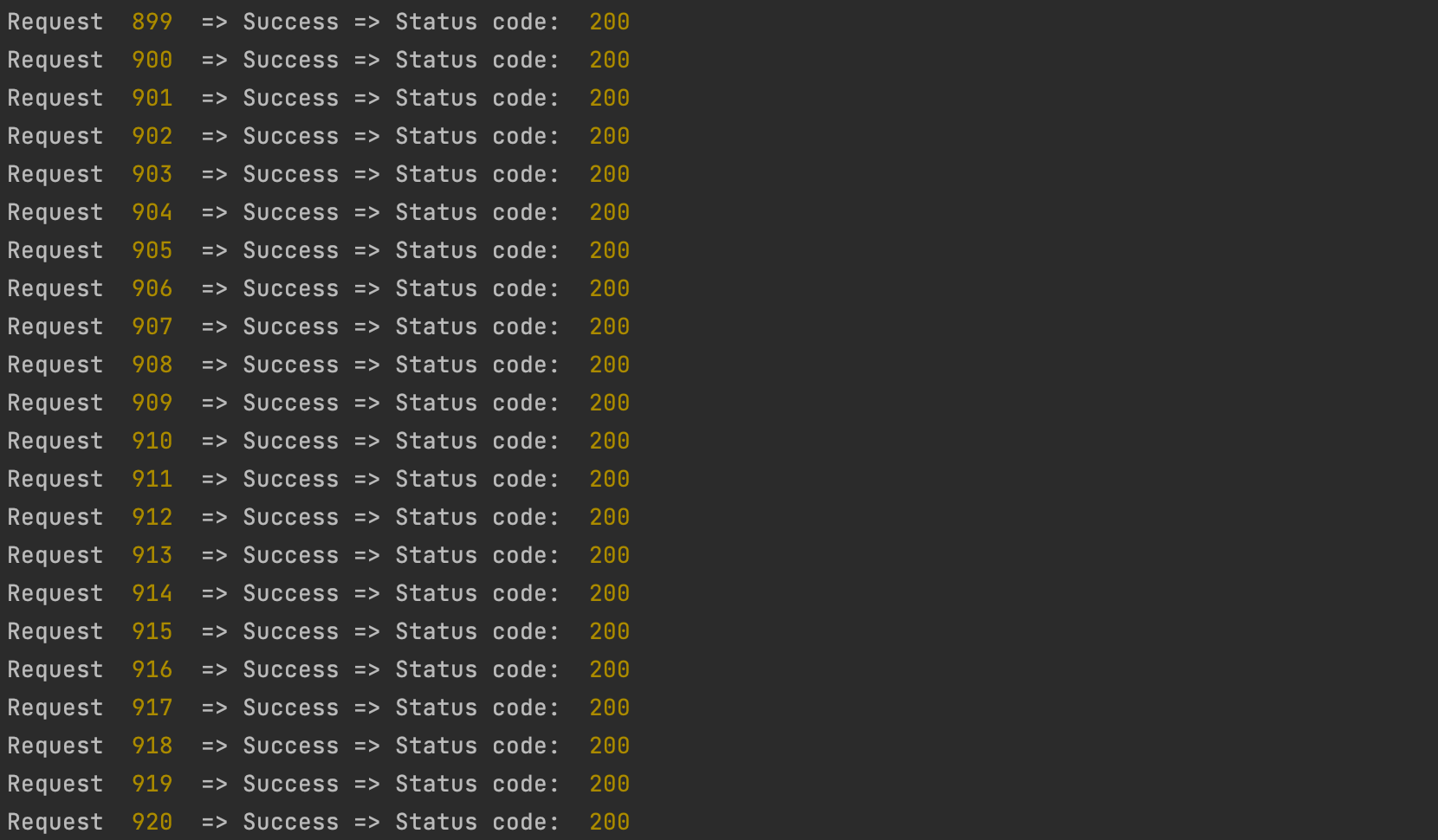

Open a new terminal from the project root directory and run the command: yarn ts-node src/bot.ts

I stopped the script after 5 seconds, by which time it had made 920 requests. Imagine your application receiving this number of requests for days or weeks 😰.
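As a rough back-of-the-envelope figure, assuming the bot could sustain that same rate (in practice it depends on your machine and the endpoint):

$$
\frac{920\ \text{requests}}{5\ \text{s}} = 184\ \text{requests/s}
\qquad
184\ \text{requests/s} \times 86\,400\ \text{s/day} = 15\,897\,600\ \text{requests/day}
$$

That is nearly 16 million requests per day from a single script on one machine.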

Configure the rate limit

To set up a rate limiter, we need to define two parameters:

- Consumer ID: the unique identifier associated with a client consuming our API. The IP address is the most commonly used identifier, and it is what we will use here.

- Requests per window: the maximum number of requests allowed in a specific timeframe.

Example: My API has a rate limit of 100 requests per 15 minutes.

If a client makes its first call at 2:00 pm and its 100th request at 2:05 pm, it must wait until 2:15 pm to get a new batch of 100 requests, which it can then use until 2:30 pm.
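To make the window arithmetic concrete, here is a minimal, dependency-free sketch of a fixed-window counter that replays the example above. It is an illustration under simplifying assumptions, not how rate-limiter-flexible works internally. The class `FixedWindowCounter` and the helper `at` are hypothetical. Timestamps are passed in explicitly as minutes since midnight, and a new window starts at the first request after the previous one has expired.

```typescript
// Hypothetical fixed-window counter, for illustration only.
// Times are minutes since midnight to keep the example readable.
class FixedWindowCounter {
  private readonly limit: number;
  private readonly windowMinutes: number;
  private windowStart: number | null = null;
  private count = 0;

  constructor(limit: number, windowMinutes: number) {
    this.limit = limit;
    this.windowMinutes = windowMinutes;
  }

  // Returns true if the request made at time `now` is allowed
  tryConsume(now: number): boolean {
    // Start a new window on the first request or once the current one has expired
    if (this.windowStart === null || now >= this.windowStart + this.windowMinutes) {
      this.windowStart = now;
      this.count = 0;
    }
    if (this.count >= this.limit) {
      return false;
    }
    this.count++;
    return true;
  }
}

const at = (hours: number, minutes: number): number => hours * 60 + minutes;

const limiter = new FixedWindowCounter(100, 15);

// First call at 2:00 pm, then 99 more calls at 2:05 pm: all accepted
const firstBatch: boolean[] = [limiter.tryConsume(at(14, 0))];
for (let i = 1; i < 100; i++) {
  firstBatch.push(limiter.tryConsume(at(14, 5)));
}

const blockedAt210 = limiter.tryConsume(at(14, 10)); // window still open until 2:15 pm
const allowedAt215 = limiter.tryConsume(at(14, 15)); // a new window starts at 2:15 pm

console.log(firstBatch.every(Boolean), blockedAt210, allowedAt215); // true false true
```

A fixed window is the simplest model that matches the example. Its known trade-off is that a client can send a burst at the end of one window and another at the start of the next.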

Implementation

There are many libraries for implementing rate limiting in Node.js; we will use rate-limiter-flexible. Let's install it:

```bash
yarn add rate-limiter-flexible
```

The rate limit check must run before the request reaches the route handler, so we implement it as an Express middleware.

Create a file src/rate-limiter-middleware.ts and add the code below:

```typescript
import { NextFunction, Request, Response } from 'express';
import { IRateLimiterOptions, RateLimiterMemory } from 'rate-limiter-flexible';

const MAX_REQUEST_LIMIT = 100;
const MAX_REQUEST_WINDOW = 15 * 60; // Per 15 minutes by IP (duration is in seconds)
const TOO_MANY_REQUESTS_MESSAGE = 'Too many requests';

const options: IRateLimiterOptions = {
  duration: MAX_REQUEST_WINDOW,
  points: MAX_REQUEST_LIMIT,
};

// Counters are kept in the memory of the Node.js process
const rateLimiter = new RateLimiterMemory(options);

export const rateLimiterMiddleware = (req: Request, res: Response, next: NextFunction) => {
  rateLimiter
    // Consume one point for the client's IP address
    .consume(req.ip)
    .then(() => {
      // Points left: pass the request to the next handler
      next();
    })
    .catch(() => {
      // No points left in the current window: reject the request
      res.status(429).json({ message: TOO_MANY_REQUESTS_MESSAGE });
    });
};
```

Register the middleware in the file src/index.ts:

```typescript
// ......
import { rateLimiterMiddleware } from './rate-limiter-middleware';

// ......
app.use(rateLimiterMiddleware);

app.use('/', roleRoute());
app.use('/', userRoute());
app.use('/documentation', swaggerUi.serve, swaggerUi.setup(apiDocumentation));
// .....
```

Note: The middleware is registered before the routes; the order matters.
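Why does the order matter? Express runs middleware and route handlers in the order they are registered, and each one either ends the response or calls `next()` to hand over to the following one. The dependency-free sketch below models only that sequencing. The `Handler` type, `runChain`, and the two stand-in steps are hypothetical simplifications, not Express's actual router. It shows that a limiter registered after a route that ends the response never runs for that route.

```typescript
// Simplified model of Express's sequential dispatch, for illustration only
type Handler = (log: string[], next: () => void) => void;

// Calls each handler in registration order until one stops calling next()
const runChain = (handlers: Handler[]): string[] => {
  const log: string[] = [];
  const dispatch = (index: number): void => {
    const handler = handlers[index];
    if (handler) {
      handler(log, () => dispatch(index + 1));
    }
  };
  dispatch(0);
  return log;
};

// Stand-in for rateLimiterMiddleware when the client still has points left
const limiterStep: Handler = (log, next) => {
  log.push('rate limiter');
  next();
};

// Stand-in for a route such as GET /users: it sends the response and does not call next()
const usersRouteStep: Handler = (log) => {
  log.push('users route');
};

console.log(runChain([limiterStep, usersRouteStep])); // [ 'rate limiter', 'users route' ]
console.log(runChain([usersRouteStep, limiterStep])); // [ 'users route' ]
```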

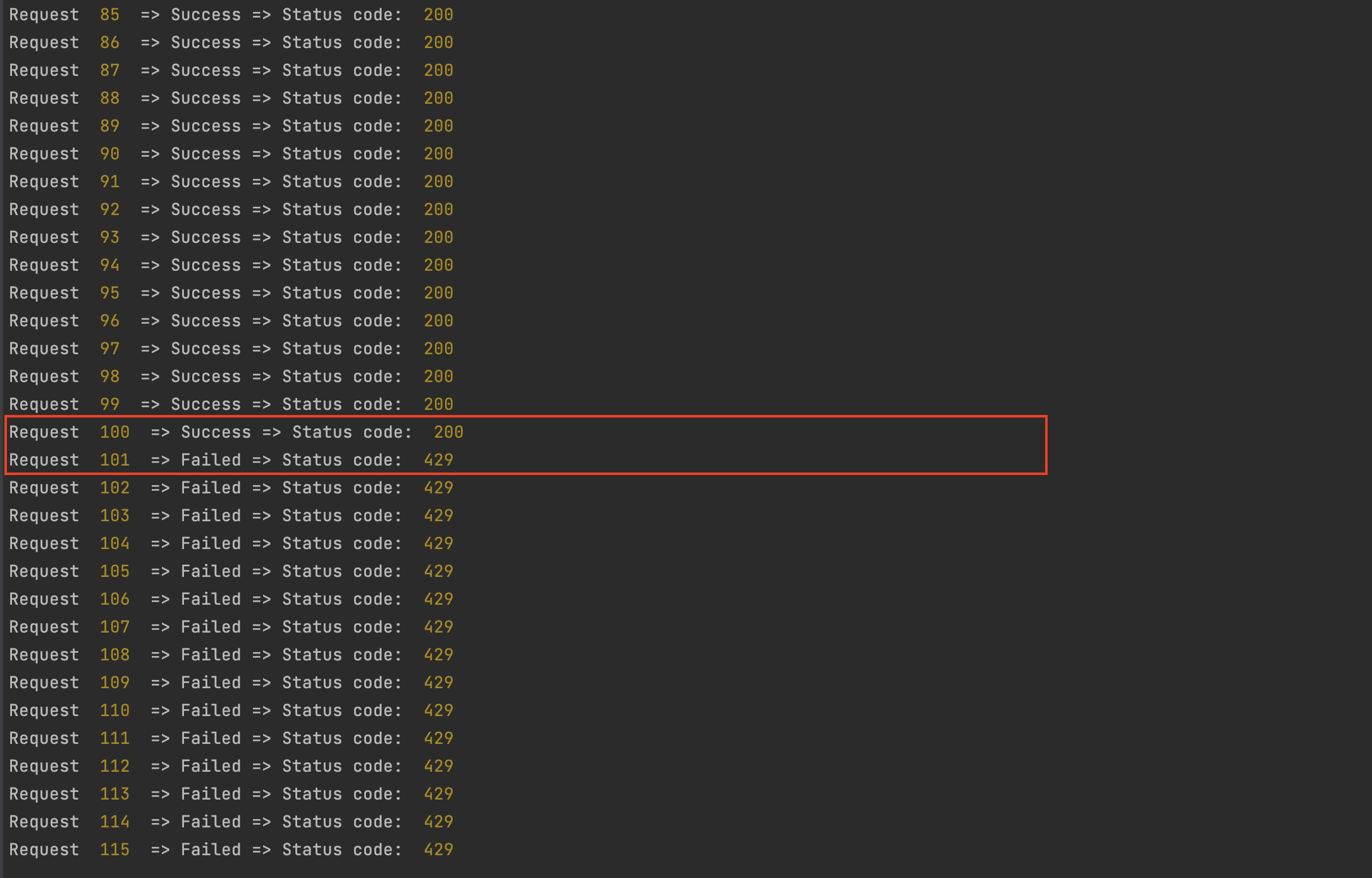

Restart the application, then rerun bot.ts from another terminal:

As we can see, after the 100th request, subsequent requests are blocked with a 429 status code 🎉

Send rate limit information to the client

Some clients may want to know how many requests they have left or when the time window resets, so they can better pace the calls they send to the API.

In the headers of every response, we can send four pieces of information:

- The number of seconds to wait before making new calls.

- The maximum number of requests for the current timeframe.

- The number of remaining requests for the current timeframe.

- The date at which the rate limit will be reset for this client's IP address.

Update the file src/rate-limiter-middleware.ts with the code below:

```typescript
import { NextFunction, Request, Response } from 'express';
import { IRateLimiterOptions, RateLimiterMemory } from 'rate-limiter-flexible';

const MAX_REQUEST_LIMIT = 100;
const MAX_REQUEST_WINDOW = 15 * 60; // Per 15 minutes by IP (duration is in seconds)
const TOO_MANY_REQUESTS_MESSAGE = 'Too many requests';

const options: IRateLimiterOptions = {
  duration: MAX_REQUEST_WINDOW,
  points: MAX_REQUEST_LIMIT,
};

const rateLimiter = new RateLimiterMemory(options);

export const rateLimiterMiddleware = (req: Request, res: Response, next: NextFunction) => {
  rateLimiter
    .consume(req.ip)
    .then((rateLimiterRes) => {
      // Seconds until the current window resets; Retry-After expects a whole number
      res.setHeader('Retry-After', Math.ceil(rateLimiterRes.msBeforeNext / 1000));
      // Maximum number of requests in the window
      res.setHeader('X-RateLimit-Limit', MAX_REQUEST_LIMIT);
      // Requests left in the current window
      res.setHeader('X-RateLimit-Remaining', rateLimiterRes.remainingPoints);
      // Date at which the window resets for this IP address
      res.setHeader('X-RateLimit-Reset', new Date(Date.now() + rateLimiterRes.msBeforeNext).toISOString());

      next();
    })
    .catch(() => {
      res.status(429).json({ message: TOO_MANY_REQUESTS_MESSAGE });
    });
};
```

Rerun the application and use a GUI HTTP client like Postman to inspect the response headers of a request.

Clients can use this information to pace the calls they send to the API and avoid hitting the limit.
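As one possible client-side policy, the sketch below decides how long to wait before the next call using the headers set by the middleware. It is a minimal, dependency-free example under several assumptions. Headers are passed as a plain object with lowercase names; the exact shape depends on the HTTP client. `Retry-After` is read as a number of seconds. The names `RateLimitHeaders` and `delayBeforeNextCall` are hypothetical.

```typescript
// Hypothetical client-side helper, for illustration only.
// Header names are assumed to be lowercase, as most Node.js HTTP clients expose them.
type RateLimitHeaders = Record<string, string | undefined>;

// Returns how many milliseconds to wait before sending the next request
const delayBeforeNextCall = (headers: RateLimitHeaders): number => {
  const remaining = Number(headers['x-ratelimit-remaining']);
  const retryAfterSeconds = Number(headers['retry-after']);

  // Headers missing or not numeric: no information, send right away
  if (Number.isNaN(remaining) || Number.isNaN(retryAfterSeconds)) {
    return 0;
  }
  // Requests left in the current window: no need to wait
  if (remaining > 0) {
    return 0;
  }
  // Window exhausted: wait until the server says the limit resets
  return retryAfterSeconds * 1000;
};

console.log(delayBeforeNextCall({ 'x-ratelimit-remaining': '42', 'retry-after': '600' })); // 0
console.log(delayBeforeNextCall({ 'x-ratelimit-remaining': '0', 'retry-after': '600' })); // 600000
```

A client would call a helper like this after each response and sleep for the returned delay before its next request, instead of running into 429 errors.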

Express rate limit data store

Rate limit consumption per IP address is stored in memory, meaning all the data is lost when you stop your application.

Storing data in memory is also a problem when you run multiple backend nodes. Each node keeps its own counters, so a client whose requests are spread across nodes can exceed the configured limit. A node's counters are also lost when that node is removed or restarted.

This is why a shared, persistent store is appropriate in a distributed environment. Fortunately, rate-limiter-flexible provides adapters for Redis, Memcached, MongoDB, MySQL, and PostgreSQL.
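To see why per-node memory breaks the limit when you run multiple backend nodes, the sketch below simulates two nodes behind a round-robin load balancer. Each node enforces a limit of 100 requests. It is a dependency-free illustration, not rate-limiter-flexible code. A plain `Map` stands in for the store, and the names `CounterStore`, `BackendNode`, `makeNode`, and `countAccepted` are hypothetical. Counters never expire here, which keeps the example short. When each node has its own store, the client gets twice the intended limit. When both nodes share one store (the role Redis or another adapter would play), the limit holds.

```typescript
// Hypothetical simulation, for illustration only; counters never expire here.
type CounterStore = Map<string, number>;
type BackendNode = (clientIp: string) => boolean;

const LIMIT = 100;

// Each simulated node checks and increments the client's counter in the store it is given
const makeNode = (store: CounterStore): BackendNode => (clientIp) => {
  const used = store.get(clientIp) ?? 0;
  if (used >= LIMIT) {
    return false;
  }
  store.set(clientIp, used + 1);
  return true;
};

// Sends `total` requests from one IP address, alternating between nodes (round robin)
const countAccepted = (nodes: BackendNode[], total: number): number => {
  let accepted = 0;
  for (let i = 0; i < total; i++) {
    const node = nodes[i % nodes.length];
    if (node && node('203.0.113.7')) {
      accepted++;
    }
  }
  return accepted;
};

// Each node keeps its own in-memory store, like a RateLimiterMemory in every process
const separate = countAccepted([makeNode(new Map()), makeNode(new Map())], 300);

// Both nodes read and write the same store
const sharedStore: CounterStore = new Map();
const shared = countAccepted([makeNode(sharedStore), makeNode(sharedStore)], 300);

console.log(separate, shared); // 200 100
```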

The link below shows how to use Redis as data storage:

Wrap up

Setting up a rate limiter for a public API is crucial to protect it from DDoS attacks, unexpected costs, and usage abuse.

To learn more about what rate-limiter-flexible can do, check out this link.

You can find the source code in the GitHub repository.

Follow me on Twitter or subscribe to my newsletter to avoid missing the upcoming posts and the tips and tricks I occasionally share.